Parents Should Not Post Children's Photos Online, Warn Safety Experts

Authored by Masooma Haq via The Epoch Times (emphasis ours),

With children spending an increasing amount of time on the internet and many uploading photos to their social media accounts, sexual predators continue to steal these images to produce child sexual abuse material (CSAM).

Easy access to artificial intelligence (AI) is further compounding the proliferation of CSAM, and law enforcement agencies and child protective organizations are seeing a dramatic rise in AI-generated CSAM.

Yaron Litwin is a digital safety expert and chief marketing officer at Netspark, the company behind a program called CaseScan that identifies AI-generated CSAM online, aiding law enforcement agencies in their investigations.

Mr. Litwin told The Epoch Times he recommends that parents and teens not post photos on any public forum and that parents talk to their children about the potential dangers of revealing personal information online.

“One of our recommendations is to be a little more cautious with images that are being posted online and really try to keep those within closed networks, where there are only people that you know,” Mr. Litwin said.

The American Academy of Child and Adolescent Psychiatry said in 2020 that on average, children ages 8 to 12 spend four to six hours a day watching or using screens, and teens spend up to nine hours a day on their devices.

Parents Together, a nongovernmental organization that provides news about issues affecting families, released a report in 2023 stating that "despite the bad and worsening risks of online sexual exploitation, 97% of children use social media and the internet every day, and 1 in 5 use it 'almost constantly.'"

One of Netspark’s safety tools is Canopy, an AI-powered tool that lets parents filter out harmful sexual content for their minor children while still giving those children the freedom to explore the internet, Mr. Litwin said.

Exploitative Content Expanding

The amount of CSAM online has gone up exponentially since generative AI became mainstream at the start of 2023, Mr. Litwin said. The problem is serious enough that all 50 states have asked Congress to institute a commission to study the problem of AI-generated CSAM, he said.

“There's definitely a correlation between the increase in AI-generated CSAM and when OpenAI and DALL-E and all these generative AI-type platforms launched,” Mr. Litwin said.

The FBI recently warned the public about the rise of AI-generated sexual abuse materials.

“Malicious actors use content manipulation technologies and services to exploit photos and videos—typically captured from an individual’s social media account, open internet, or requested from the victim—into sexually-themed images that appear true-to-life in likeness to a victim, then circulate them on social media, public forums, or pornographic websites,” said the FBI in a recent statement.

Mr. Litwin said that to really protect children from online predators, it is important for parents and guardians to clearly discuss the potential dangers of posting photos and talking to strangers online.

“So just really communicating with our kids about some of these risks and explaining to them that this might happen and making sure that they're aware,” he said.

Dangers of Generative AI

Roo Powell, founder of Safe from Online Sex Abuse (SOSA), told The Epoch Times that because predators can use AI to turn an image of a fully clothed child into an explicit one, it is best not to post any images of children online, even of toddlers.

“At SOSA, we encourage parents not to publicly share images or videos of their children in diapers or having a bath. Even though their genitals may technically be covered, perpetrators can save this content for their own gratification, or can use AI to make it explicit and then share that widely,” Ms. Powell said in an email.

While some people say AI-generated CSAM is not as harmful as images depicting the sexual abuse of real-life children, many believe it is worse.

AI-generated CSAM can be produced much more quickly than conventional material, inundating law enforcement with even more abuse referrals, and experts in the AI and online parental control space expect the problem to only get worse.

In other cases, an AI-generated CSAM image is created from a photo taken from a real child’s social media account and altered to be sexually explicit, endangering children who had not otherwise been victimized, as well as their parents.

In worst-case scenarios, bad actors use images of real victims of child sexual abuse as a base to create computer-generated images. They can use the original photograph as an initial input, which is then altered according to prompts.

In some cases, the photo’s subject can be made to look younger or older.

In 2023, the Stanford Internet Observatory at Stanford University, in conjunction with Thorn, a nonprofit focused on technology that helps defend children from abuse, released a report titled "Generative ML and CSAM: Implications and Mitigations" (ML stands for machine learning).

“In just the first few months of 2023, a number of advancements have greatly increased end-user control over image results and their resultant realism, to the point that some images are only distinguishable from reality if the viewer is very familiar with photography, lighting and the characteristics of diffusion model outputs,” the report states.

Studies have shown a link between viewing CSAM and sexually abusing children in real life.

In 2010, Canadian forensic psychologist Michael C. Seto and colleagues reviewed several studies and found that 50 to 60 percent of people who viewed CSAM admitted to abusing children themselves.

Even when AI-generated CSAM images are not created from images of real children, they fuel the growth of the child exploitation market by normalizing CSAM and feeding the appetites of those who seek to victimize children.

Sextortion on the Rise

Because of how realistic it is, AI-generated CSAM is facilitating a rise in cases of sextortion.

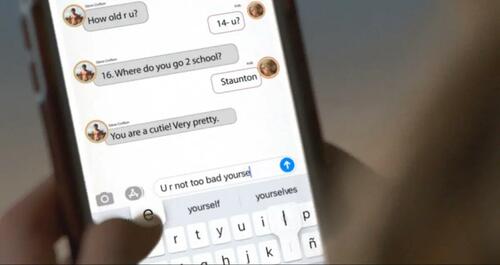

Sextortion occurs when a predator pretends to be a young person to solicit semi- or fully-nude images from a victim and then extorts money or sexual acts from them under threat of making their images public.

In AI-facilitated cases, the criminal can alter an ordinary image of the victim to make it sexual.

In one case, Mr. Litwin said a teenage weightlifting enthusiast posted a shirtless selfie that was then used by a criminal to create an AI-generated nude photo of him to extort money from the minor.

In other cases, the perpetrator might threaten to disclose the image, damaging the minor’s reputation. Faced with such a threat, many teens comply with the criminal's demands, and some have taken their own lives rather than risk public humiliation.

The National Center for Missing and Exploited Children (NCMEC) operates the CyberTipline, where citizens can report child sexual exploitation on the internet. In 2022, the tip line received over 32 million reports of CSAM, more than 87,000 reports per day, although some of those reports concern a single viral child sex abuse image reported multiple times. Reports of online enticement, a category that includes sextortion, rose 82 percent from 2021.

In December 2022, the FBI estimated that at least 3,000 minors had been victims of financial sextortion over the preceding year.

“The FBI has seen a horrific increase in reports of financial sextortion schemes targeting minor boys—and the fact is that the many victims who are afraid to come forward are not even included in those numbers,” said FBI Director Christopher Wray in a 2022 statement.

The Parents Together report further states that “recent research shows 1 in 3 children can now expect to have an unwelcome sexual experience online before they turn 18.”

In addition, a 2022 report by Thorn states that 1 in 6 children say they have shared explicit images of themselves online, and 1 in 4 children say the practice is normal.

Using Good AI to Fight Bad AI

Before AI became widely available, editing and generating realistic images required skill with image-editing software. AI has made the process so quick and easy that even amateur users can generate lifelike images.

Netspark is leading the fight against AI-generated CSAM with CaseScan, its own AI-powered cyber safety tool, said Mr. Litwin.

“We definitely believe that to fight the bad AI, it's going to come through AI for good, and that's where we're focused,” he said.

Law enforcement agencies must go through massive amounts of images each day and are often unable to get through all of the CSAM reports in a timely manner, said Mr. Litwin, but this is exactly where CaseScan is able to assist investigators.

Unless they are using AI-based tools, police spend an extensive amount of time assessing whether the child in a photo is AI-generated or a real victim of sexual abuse. Even before AI-generated content, law enforcement and child safety organizations were overwhelmed by the immense volume of CSAM reports.

Under U.S. law, AI-generated CSAM is treated the same as CSAM of real-life children, but Mr. Litwin said he does not know of any AI-generated CSAM case that has been prosecuted, so there is no precedent yet.

“I think today it's hard to take to court, it's hard to create robust cases. And my guess is that that's one reason that we're seeing so much of it,” he said.

To prosecute the producers of this online AI-generated CSAM, laws need to be updated to target the technologically advanced criminal activity committed by sexual predators.

Because AI is constantly advancing, Mr. Litwin said he believes predators will always find ways to circumvent the technological limits set by safety companies, but Netspark is also adapting to keep pace with those who create AI-generated CSAM.

Mr. Litwin said CaseScan has enabled investigators to significantly reduce the amount of time it takes to identify AI-generated CSAM and to lighten the mental burden on investigators, who usually must view the images.

Tech Companies Must Do More

Ms. Powell said social media companies need to do more in the fight against CSAM.

“To effectively help protect kids and teens, Congress can mandate that social media platforms implement effective content moderation systems that identify cases of child abuse and exploitation and escalate those to law enforcement as needed,” she said.

“Congress can also require all social media platforms to create parental control features to help mitigate the risk of online predation. These can include the ability for a parent user to turn off all chat/messaging features, restrict who’s following their child, and manage screen time on the app,” she added.

In April, Sen. Dick Durbin (D-Ill.) introduced the STOP CSAM Act of 2023, which includes a provision that would change Section 230 of the Communications Decency Act and allow CSAM victims to sue social media platforms that host, store, or otherwise make this illegal content available.

“If [social media companies] don't put sufficient safety measures in place, they should be held legally accountable,” Mr. Durbin said during a Senate Judiciary subcommittee meeting in June.

Ms. Powell said she believes Congress has a responsibility to do more to keep children safe from abuse.

“Laws need to keep up with the constant evolution of technology,” she said, adding that law enforcement also needs tools to help them work faster.

Improving NCMEC

Reps. Ann Wagner (R-Mo.) and Sylvia Garcia (D-Texas) recently introduced the Child Online Safety Modernization Act of 2023 to fill gaps in how CSAM is reported and help ensure that criminals can be held accountable.

Currently, there are no requirements regarding what online platforms must include in a report to NCMEC's CyberTipline, often leaving the organization and law enforcement without enough information to locate and rescue the child. In 2022, about 50 percent of reports were untraceable as a result.

In addition, the law does not mandate that online platforms report instances of child sex trafficking and enticement.

According to a 2022 report by Thorn, the majority of CyberTipline reports submitted by the tech industry contained such limited information that it was impossible for NCMEC to identify where the offense took place, and therefore the organization could not notify the appropriate law enforcement agency.

The Child Online Safety Modernization Act would bolster the NCMEC CyberTipline by 1) requiring reports from online platforms to include information to identify and locate the child depicted and the disseminator of the CSAM; 2) requiring online platforms to report instances of child sex trafficking and the sexual enticement of a child; and 3) allowing NCMEC to share technical identifiers associated with CSAM with nonprofits.

The bill would also require that reports be preserved for a full year, giving law enforcement the time it needs to investigate the crimes.

Stefan Turkheimer, interim vice president for public policy at RAINN (Rape, Abuse & Incest National Network), said Ms. Wagner’s bill is crucial to helping law enforcement successfully investigate CSAM reports.

“The Child Online Safety Modernization Act is a step towards greater cooperation between law enforcement and internet service providers that will support the efforts to investigate, identify, and locate the children depicted in child sexual abuse materials,” Mr. Turkheimer said in a recent press statement.

Making this improvement is crucial to stopping the sexual exploitation of children, said Ms. Powell.

“In our collaborations with law enforcement, SOSA has seen a perpetrator go from the very first message to arriving at a minor’s house for sex in under two hours. Anyone with the propensity to harm children can do so quickly and easily from anywhere in the world just through internet access,” she said.


from ZeroHedge News https://ift.tt/sF9vMnI
